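Building a DeepSeek Chat UI

May 15, 2024 · about a 6-minute read

I. Technical Architecture

With AI tools available, the front-end pages that backend programmers used to find tedious and painful can now be built with very little effort.

As long as we describe clearly what the page should do, an AI tool can produce the code quickly and accurately.

There are many AI tools to choose from, including OpenAI, DeepSeek, and Zhipu AI.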

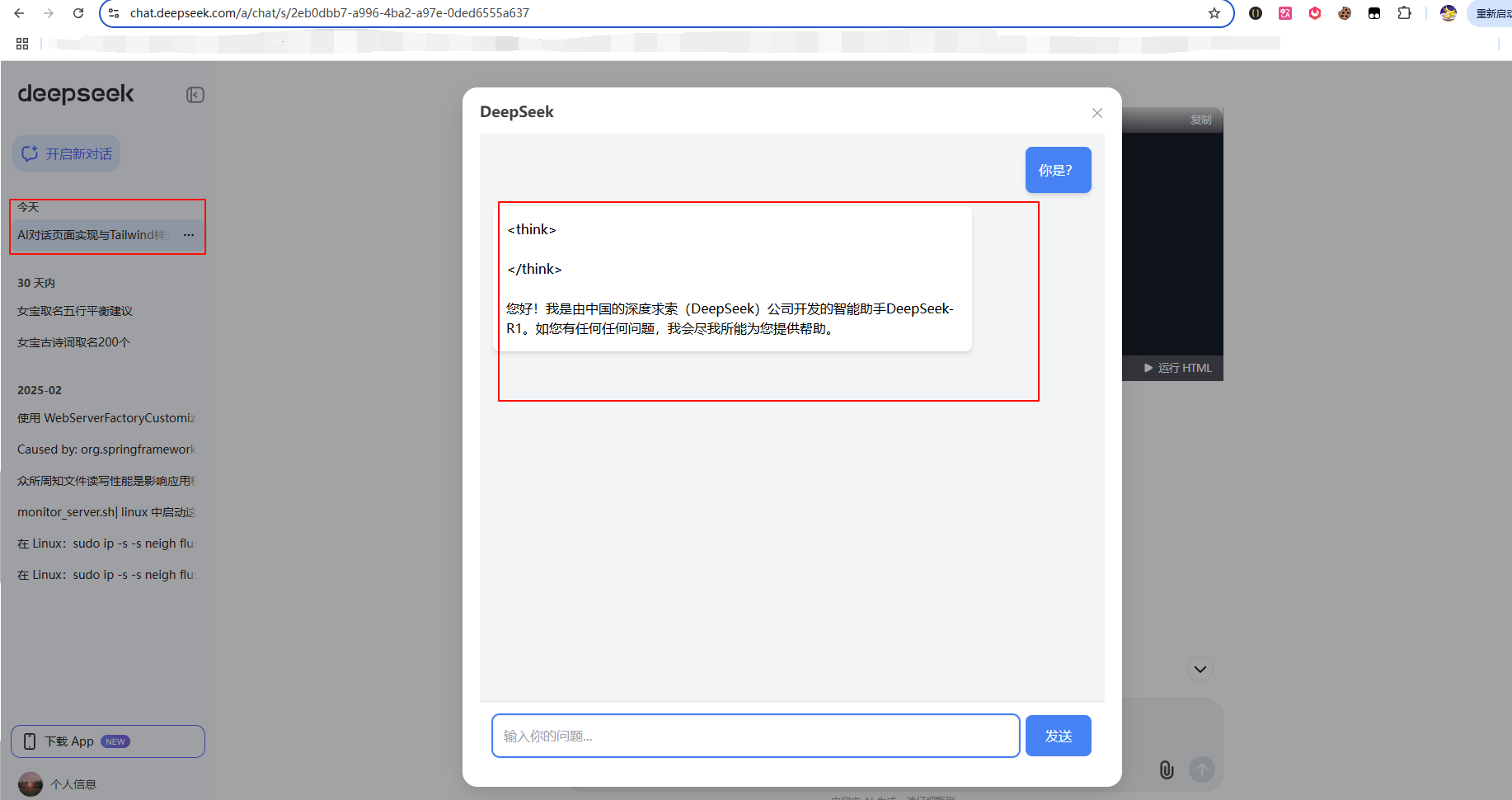

- https://chat.deepseek.com/

- https://v0.dev/

- https://claude.ai/ (the strongest for coding)

- https://chatglm.cn/

- https://openai.itedus.cn/

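There are many AI tools on the market now. Search around and try a few; at least one of them will be usable for you.

II. Feature Development

1. Prompt Materials

1.1 Server-side Endpoint

curl 'http://localhost:7080/api/v1/ollama/generate_stream?model=deepseek-r1:1.5b&message=1%2B1'

Tell the AI how your endpoint is called; here it is a GET request.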
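The query string in the curl command has to be URL-encoded (`1+1` becomes `1%2B1`). A minimal sketch of how the page could build that URL, assuming the host and path shown above; `buildStreamUrl` is a hypothetical helper name, not part of the original project:

```javascript
// Sketch: build the streaming endpoint URL with properly encoded parameters.
// buildStreamUrl is a hypothetical helper; host, path, and parameter names
// are taken from the curl command above.
function buildStreamUrl(baseUrl, model, message) {
  const url = new URL('/api/v1/ollama/generate_stream', baseUrl);
  // searchParams.set() percent-encodes reserved characters such as "+" and ":"
  url.searchParams.set('model', model);
  url.searchParams.set('message', message);
  return url.toString();
}

const url = buildStreamUrl('http://localhost:7080', 'deepseek-r1:1.5b', '1+1');
console.log(url);
```

Using `URL` and `searchParams` instead of string concatenation means a message containing `&`, `+`, or spaces cannot corrupt the query string.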

1.2 Response Data

[

{

"result": {

"metadata": {

"finishReason": null,

"contentFilters": [],

"empty": true

},

"output": {

"messageType": "ASSISTANT",

"metadata": {

"messageType": "ASSISTANT"

},

"toolCalls": [],

"media": [],

"text": "1"

}

},

"results": [

{

"metadata": {

"finishReason": null,

"contentFilters": [],

"empty": true

},

"output": {

"messageType": "ASSISTANT",

"metadata": {

"messageType": "ASSISTANT"

},

"toolCalls": [],

"media": [],

"text": "1"

}

}

],

"metadata": {

"id": "",

"model": "deepseek-r1:1.5b",

"rateLimit": {

"tokensLimit": 0,

"tokensReset": "PT0S",

"requestsLimit": 0,

"tokensRemaining": 0,

"requestsReset": "PT0S",

"requestsRemaining": 0

},

"usage": {

"promptTokens": 0,

"completionTokens": 0,

"totalTokens": 0

},

"promptMetadata": [],

"empty": false

}

},

{

"result": {

"metadata": {

"finishReason": null,

"contentFilters": [],

"empty": true

},

"output": {

"messageType": "ASSISTANT",

"metadata": {

"messageType": "ASSISTANT"

},

"toolCalls": [],

"media": [],

"text": " +"

}

},

"results": [

{

"metadata": {

"finishReason": null,

"contentFilters": [],

"empty": true

},

"output": {

"messageType": "ASSISTANT",

"metadata": {

"messageType": "ASSISTANT"

},

"toolCalls": [],

"media": [],

"text": " +"

}

}

],

"metadata": {

"id": "",

"model": "deepseek-r1:1.5b",

"rateLimit": {

"tokensLimit": 0,

"tokensReset": "PT0S",

"requestsLimit": 0,

"tokensRemaining": 0,

"requestsReset": "PT0S",

"requestsRemaining": 0

},

"usage": {

"promptTokens": 0,

"completionTokens": 0,

"totalTokens": 0

},

"promptMetadata": [],

"empty": false

}

},

{

"result": {

"metadata": {

"finishReason": null,

"contentFilters": [],

"empty": true

},

"output": {

"messageType": "ASSISTANT",

"metadata": {

"messageType": "ASSISTANT"

},

"toolCalls": [],

"media": [],

"text": " "

}

},

"results": [

{

"metadata": {

"finishReason": null,

"contentFilters": [],

"empty": true

},

"output": {

"messageType": "ASSISTANT",

"metadata": {

"messageType": "ASSISTANT"

},

"toolCalls": [],

"media": [],

"text": " "

}

}

],

"metadata": {

"id": "",

"model": "deepseek-r1:1.5b",

"rateLimit": {

"tokensLimit": 0,

"tokensReset": "PT0S",

"requestsLimit": 0,

"tokensRemaining": 0,

"requestsReset": "PT0S",

"requestsRemaining": 0

},

"usage": {

"promptTokens": 0,

"completionTokens": 0,

"totalTokens": 0

},

"promptMetadata": [],

"empty": false

}

},

{

"result": {

"metadata": {

"finishReason": null,

"contentFilters": [],

"empty": true

},

"output": {

"messageType": "ASSISTANT",

"metadata": {

"messageType": "ASSISTANT"

},

"toolCalls": [],

"media": [],

"text": "1"

}

},

"results": [

{

"metadata": {

"finishReason": null,

"contentFilters": [],

"empty": true

},

"output": {

"messageType": "ASSISTANT",

"metadata": {

"messageType": "ASSISTANT"

},

"toolCalls": [],

"media": [],

"text": "1"

}

}

],

"metadata": {

"id": "",

"model": "deepseek-r1:1.5b",

"rateLimit": {

"tokensLimit": 0,

"tokensReset": "PT0S",

"requestsLimit": 0,

"tokensRemaining": 0,

"requestsReset": "PT0S",

"requestsRemaining": 0

},

"usage": {

"promptTokens": 0,

"completionTokens": 0,

"totalTokens": 0

},

"promptMetadata": [],

"empty": false

}

},

{

"result": {

"metadata": {

"finishReason": null,

"contentFilters": [],

"empty": true

},

"output": {

"messageType": "ASSISTANT",

"metadata": {

"messageType": "ASSISTANT"

},

"toolCalls": [],

"media": [],

"text": " 等"

}

},

"results": [

{

"metadata": {

"finishReason": null,

"contentFilters": [],

"empty": true

},

"output": {

"messageType": "ASSISTANT",

"metadata": {

"messageType": "ASSISTANT"

},

"toolCalls": [],

"media": [],

"text": " 等"

}

}

],

"metadata": {

"id": "",

"model": "deepseek-r1:1.5b",

"rateLimit": {

"tokensLimit": 0,

"tokensReset": "PT0S",

"requestsLimit": 0,

"tokensRemaining": 0,

"requestsReset": "PT0S",

"requestsRemaining": 0

},

"usage": {

"promptTokens": 0,

"completionTokens": 0,

"totalTokens": 0

},

"promptMetadata": [],

"empty": false

}

},

{

"result": {

"metadata": {

"finishReason": "stop",

"contentFilters": [],

"empty": true

},

"output": {

"messageType": "ASSISTANT",

"metadata": {

"messageType": "ASSISTANT"

},

"toolCalls": [],

"media": [],

"text": ""

}

},

"results": [

{

"metadata": {

"finishReason": "stop",

"contentFilters": [],

"empty": true

},

"output": {

"messageType": "ASSISTANT",

"metadata": {

"messageType": "ASSISTANT"

},

"toolCalls": [],

"media": [],

"text": ""

}

}

],

"metadata": {

"id": "",

"model": "deepseek-r1:1.5b",

"rateLimit": {

"tokensLimit": 0,

"tokensReset": "PT0S",

"requestsLimit": 0,

"tokensRemaining": 0,

"requestsReset": "PT0S",

"requestsRemaining": 0

},

"usage": {

"promptTokens": 6,

"completionTokens": 17,

"totalTokens": 23

},

"promptMetadata": [],

"empty": false

}

}

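]

Note: the JSON actually returned is large, so it has been truncated here. It is enough that the sample shows the response text and the stop marker.

Content: read from result.output.text

End: result.metadata.finishReason = stop means the stream has finished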
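The two rules above can be sketched as a small function that folds the streamed objects into one answer. This is an illustration only: `foldChunks` and `chunk` are hypothetical names, and the sample keeps just the two fields the rules mention, with the text values from the truncated response above.

```javascript
// Sketch: accumulate result.output.text until finishReason === 'stop'.
// foldChunks and chunk are hypothetical helpers for illustration.
function foldChunks(chunks) {
  let text = '';
  let finished = false;
  for (const item of chunks) {
    // text may be empty or missing, so fall back to an empty string
    text += item?.result?.output?.text ?? '';
    if (item?.result?.metadata?.finishReason === 'stop') {
      finished = true;
      break;
    }
  }
  return { text, finished };
}

// Build a trimmed-down object with only the fields used above
const chunk = (text, finishReason = null) => ({
  result: { metadata: { finishReason }, output: { text } },
});

const sample = [
  chunk('1'), chunk(' +'), chunk(' '), chunk('1'), chunk(' 等'), chunk('', 'stop'),
];
const folded = foldChunks(sample);
console.log(folded);
```

Note that the last object carries the stop marker together with an empty `text`, which is why the empty case has to be handled.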

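2. Assembling the Prompt

Based on the following information, write a UI that connects to the server-side endpoint.

The streaming GET endpoint is implemented with Spring Boot and the Spring AI framework, as follows: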

curl 'http://localhost:7080/api/v1/ollama/generate_stream?model=deepseek-r1:1.5b&message=1%2B1'

@GetMapping("generate_stream")

@Override

public Flux<ChatResponse> generateStream(@RequestParam String model, @RequestParam String message) {

return chatModel.stream(new Prompt(message, OllamaOptions.builder().model(model).build()));

}

Streaming GET response data; one object from the array:

{

"result": {

"metadata": {

"finishReason": null,

"contentFilters": [],

"empty": true

},

"output": {

"messageType": "ASSISTANT",

"metadata": {

"messageType": "ASSISTANT"

},

"toolCalls": [],

"media": [],

"text": "1"

}

},

"results": [

{

"metadata": {

"finishReason": null,

"contentFilters": [],

"empty": true

},

"output": {

"messageType": "ASSISTANT",

"metadata": {

"messageType": "ASSISTANT"

},

"toolCalls": [],

"media": [],

"text": "1"

}

}

],

"metadata": {

"id": "",

"model": "deepseek-r1:1.5b",

"rateLimit": {

"tokensLimit": 0,

"tokensReset": "PT0S",

"requestsLimit": 0,

"tokensRemaining": 0,

"requestsReset": "PT0S",

"requestsRemaining": 0

},

"usage": {

"promptTokens": 0,

"completionTokens": 0,

"totalTokens": 0

},

"promptMetadata": [],

"empty": false

}

}

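As described above, help me write a good-looking AI chat page.

1. Enter a message and click the send button to call the server's streaming endpoint; the front end renders the response.

2. Implement it in HTML and JavaScript, and write the CSS with Tailwind.

3. Call the API through const eventSource = new EventSource(apiUrl);

4. Read the response text to display from result.output.text. Note that text may be empty.

5. Treat result.metadata.finishReason = stop as the end-of-stream marker.

6. Keep the overall style clean and attractive.

DeepSeek: https://chat.deepseek.com/a/chat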
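Item 3 relies on `EventSource`, which consumes the `text/event-stream` format: each event is a block of `data:` lines ended by a blank line, and the browser hands the joined payload to `onmessage` as `event.data`. The sketch below parses that wire format by hand to show what each event contains. It assumes the endpoint emits one JSON object per event; `parseSseFrames` is a hypothetical helper, and it ignores other SSE fields such as `event:` and `id:`.

```javascript
// Sketch: parse raw text/event-stream content into JSON payloads.
// parseSseFrames is a hypothetical helper; the browser's EventSource does
// this work for you and exposes the payload as event.data.
function parseSseFrames(raw) {
  const payloads = [];
  // Events are separated by a blank line
  for (const frame of raw.split(/\r?\n\r?\n/)) {
    const dataLines = frame
      .split(/\r?\n/)
      .filter((line) => line.startsWith('data:'))
      // Drop the field name and at most one leading space
      .map((line) => line.slice(5).replace(/^ /, ''));
    if (dataLines.length === 0) continue;
    // Multiple data lines in one event are joined with newlines
    payloads.push(JSON.parse(dataLines.join('\n')));
  }
  return payloads;
}

const rawStream =
  'data:{"result":{"output":{"text":"1"}}}\n\n' +
  'data: {"result":{"output":{"text":" +"}}}\n\n';
const events = parseSseFrames(rawStream);
console.log(events.map((e) => e.result.output.text));
```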

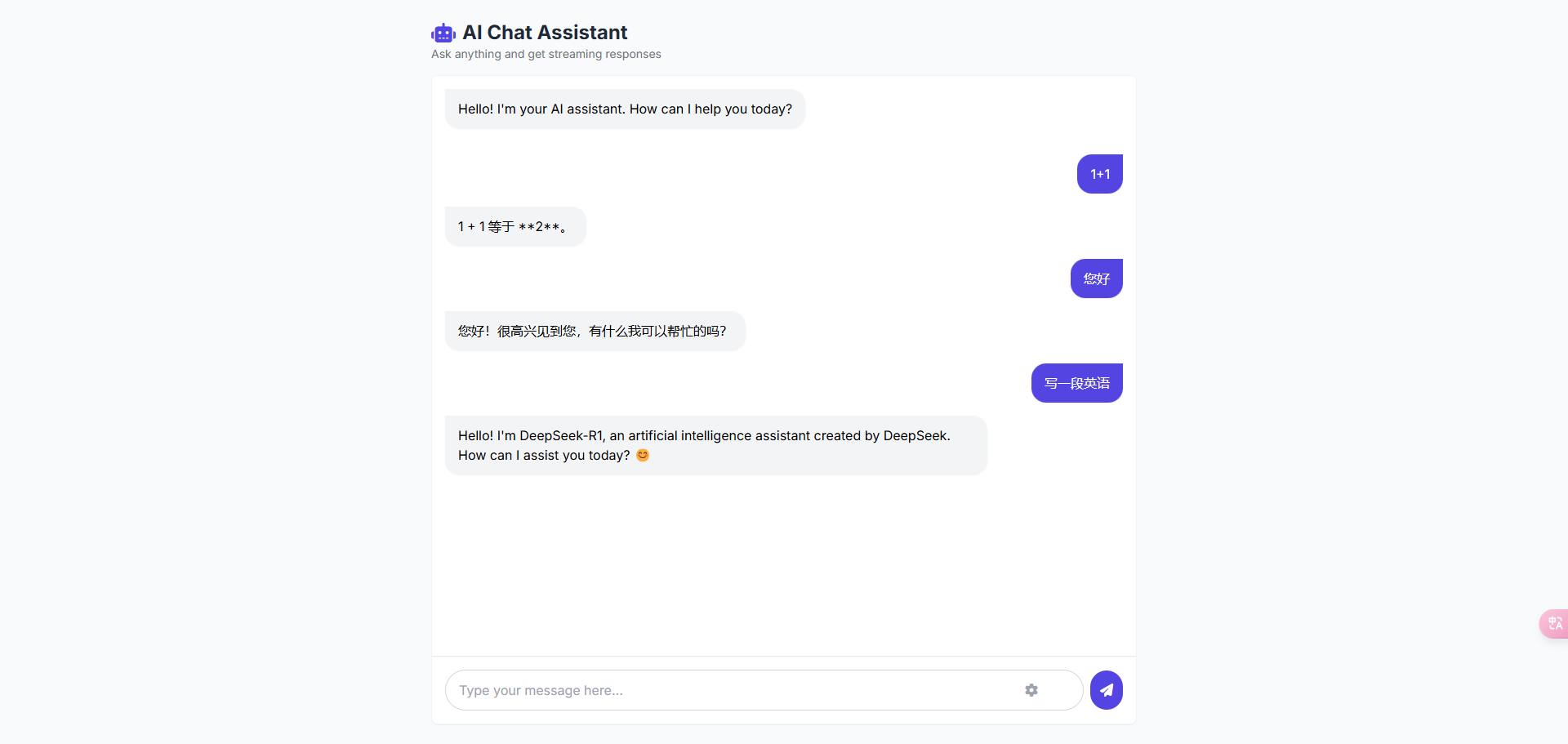

3. Final HTML Implementation

<!DOCTYPE html>

<html lang="en">

<head>

<meta charset="UTF-8">

<meta name="viewport" content="width=device-width, initial-scale=1.0">

<title>AI Chat Interface</title>

<script src="https://cdn.tailwindcss.com"></script>

<link rel="stylesheet" href="https://cdnjs.cloudflare.com/ajax/libs/font-awesome/6.4.0/css/all.min.css">

<style>

@import url('https://fonts.googleapis.com/css2?family=Inter:wght@300;400;500;600;700&display=swap');

body {

font-family: 'Inter', sans-serif;

}

.typing-indicator {

display: inline-flex;

align-items: center;

}

.typing-indicator span {

height: 8px;

width: 8px;

margin: 0 1px;

background-color: #9ca3af;

border-radius: 50%;

display: inline-block;

animation: bounce 1.4s infinite ease-in-out both;

}

.typing-indicator span:nth-child(1) {

animation-delay: -0.32s;

}

.typing-indicator span:nth-child(2) {

animation-delay: -0.16s;

}

@keyframes bounce {

0%, 80%, 100% {

transform: scale(0);

} 40% {

transform: scale(1.0);

}

}

.message-container {

max-height: calc(100vh - 180px);

}

.ai-message {

background-color: #f3f4f6;

border-radius: 0 18px 18px 18px;

}

.user-message {

background-color: #4f46e5;

color: white;

border-radius: 18px 0 18px 18px;

}

.message {

max-width: 80%;

margin-bottom: 16px;

padding: 12px 16px;

animation: fadeIn 0.3s ease-in-out;

box-shadow: 0 1px 2px rgba(0, 0, 0, 0.05);

}

@keyframes fadeIn {

from { opacity: 0; transform: translateY(10px); }

to { opacity: 1; transform: translateY(0); }

}

</style>

</head>

<body class="bg-gray-50 h-screen flex flex-col">

<div class="container mx-auto px-4 py-6 flex flex-col h-full max-w-4xl">

<header class="mb-4">

<h1 class="text-2xl font-bold text-gray-800 flex items-center">

<i class="fas fa-robot text-indigo-600 mr-2"></i>

AI Chat Assistant

</h1>

<p class="text-gray-500 text-sm">Ask anything and get streaming responses</p>

</header>

<div class="flex-1 overflow-hidden flex flex-col bg-white rounded-lg shadow-sm border border-gray-100">

<div id="message-container" class="message-container flex-1 overflow-y-auto p-4">

<div class="flex flex-col space-y-4">

<div class="ai-message message self-start">

<p>Hello! I'm your AI assistant. How can I help you today?</p>

</div>

</div>

</div>

<div class="border-t border-gray-200 p-4">

<form id="chat-form" class="flex items-center space-x-2">

<div class="relative flex-1">

<input

id="message-input"

type="text"

placeholder="Type your message here..."

class="w-full px-4 py-3 rounded-full border border-gray-300 focus:outline-none focus:ring-2 focus:ring-indigo-500 focus:border-transparent pr-10"

>

<button

id="model-select-btn"

type="button"

class="absolute right-14 top-1/2 transform -translate-y-1/2 text-gray-400 hover:text-indigo-600"

title="Select model"

>

<i class="fas fa-cog"></i>

</button>

</div>

<button

type="submit"

class="bg-indigo-600 text-white p-3 rounded-full hover:bg-indigo-700 transition-colors focus:outline-none focus:ring-2 focus:ring-indigo-500 focus:ring-offset-2"

>

<i class="fas fa-paper-plane"></i>

</button>

</form>

<div id="model-dropdown" class="hidden absolute right-8 bottom-20 bg-white shadow-lg rounded-lg border border-gray-200 p-3 z-10">

<div class="text-sm font-medium text-gray-700 mb-2">Select Model</div>

<select id="model-select" class="w-full p-2 border border-gray-300 rounded focus:outline-none focus:ring-2 focus:ring-indigo-500">

<option value="deepseek-r1:1.5b">deepseek-r1:1.5b</option>

<option value="llama3:8b">llama3:8b</option>

<option value="mistral:7b">mistral:7b</option>

</select>

</div>

</div>

</div>

</div>

<script>

document.addEventListener('DOMContentLoaded', () => {

const messageContainer = document.getElementById('message-container');

const chatForm = document.getElementById('chat-form');

const messageInput = document.getElementById('message-input');

const modelSelectBtn = document.getElementById('model-select-btn');

const modelDropdown = document.getElementById('model-dropdown');

const modelSelect = document.getElementById('model-select');

let currentModel = 'deepseek-r1:1.5b';

let isStreaming = false;

let eventSource = null;

// Toggle model dropdown

modelSelectBtn.addEventListener('click', () => {

modelDropdown.classList.toggle('hidden');

});

// Close dropdown when clicking outside

document.addEventListener('click', (e) => {

if (!modelSelectBtn.contains(e.target) && !modelDropdown.contains(e.target)) {

modelDropdown.classList.add('hidden');

}

});

// Update model when selected

modelSelect.addEventListener('change', () => {

currentModel = modelSelect.value;

modelDropdown.classList.add('hidden');

});

// Handle form submission

chatForm.addEventListener('submit', (e) => {

e.preventDefault();

const message = messageInput.value.trim();

if (!message || isStreaming) return;

// Add user message to chat

addMessage(message, 'user');

messageInput.value = '';

// Create typing indicator

const aiMessageContainer = document.createElement('div');

aiMessageContainer.className = 'ai-message message self-start';

aiMessageContainer.innerHTML = `

<div class="typing-indicator">

<span></span>

<span></span>

<span></span>

</div>

`;

messageContainer.querySelector('.flex').appendChild(aiMessageContainer);

scrollToBottom();

// Start streaming response

streamResponse(message, aiMessageContainer);

});

function addMessage(text, sender) {

const messageElement = document.createElement('div');

messageElement.className = sender === 'user' ? 'user-message message self-end' : 'ai-message message self-start';

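// Use textContent so user input is shown as text, never parsed as HTML

const paragraph = document.createElement('p');

paragraph.textContent = text;

messageElement.appendChild(paragraph);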

const messagesContainer = messageContainer.querySelector('.flex');

messagesContainer.appendChild(messageElement);

scrollToBottom();

}

function streamResponse(message, aiMessageContainer) {

isStreaming = true;

// Encode parameters for URL

const encodedMessage = encodeURIComponent(message);

const encodedModel = encodeURIComponent(currentModel);

const apiUrl = `http://localhost:7080/api/v1/ollama/generate_stream?model=${encodedModel}&message=${encodedMessage}`;

// Close any existing connection

if (eventSource) {

eventSource.close();

}

// Create new EventSource connection

eventSource = new EventSource(apiUrl);

let responseText = '';

eventSource.onmessage = (event) => {

try {

const data = JSON.parse(event.data);

// Extract text from the response

const text = data.result?.output?.text || '';

if (text) {

responseText += text;

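// Replace the typing indicator, then set the text without parsing it as HTML

aiMessageContainer.innerHTML = '<p></p>';

aiMessageContainer.firstElementChild.textContent = responseText;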

scrollToBottom();

}

// Check if streaming is finished

if (data.result?.metadata?.finishReason === 'stop') {

eventSource.close();

isStreaming = false;

}

} catch (error) {

console.error('Error parsing event data:', error);

}

};

eventSource.onerror = (error) => {

console.error('EventSource error:', error);

eventSource.close();

isStreaming = false;

// Replace typing indicator with error message if no response was received

if (!responseText) {

aiMessageContainer.innerHTML = `<p class="text-red-500">Sorry, there was an error connecting to the AI service.</p>`;

}

};

}

function scrollToBottom() {

messageContainer.scrollTop = messageContainer.scrollHeight;

}

});

</script>

</body>

</html>

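The image above shows the final result. A few minor display issues remain, but that is fine: they will be dealt with later, and for now it is enough that the page works end to end.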
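The article does not say what the display issues are. One likely candidate, stated here as an assumption, is that deepseek-r1 models usually wrap their reasoning in `<think>...</think>` tags, which a page may want to show separately from the answer. A minimal sketch of splitting the accumulated text on each update; `splitThinking` is a hypothetical helper:

```javascript
// Sketch: separate the reasoning section from the answer in streamed text.
// Assumes the model marks reasoning with <think>...</think>; splitThinking
// is a hypothetical helper, not part of the original page.
function splitThinking(raw) {
  const open = '<think>';
  const close = '</think>';
  const start = raw.indexOf(open);
  if (start === -1) {
    return { thinking: '', answer: raw };
  }
  const end = raw.indexOf(close, start);
  if (end === -1) {
    // The closing tag has not arrived yet: everything so far is reasoning
    return { thinking: raw.slice(start + open.length), answer: raw.slice(0, start) };
  }
  return {
    thinking: raw.slice(start + open.length, end).trim(),
    answer: (raw.slice(0, start) + raw.slice(end + close.length)).trim(),
  };
}

console.log(splitThinking('<think>\n1+1 is 2\n</think>\n\n2'));
```

Because it works on the full accumulated string, it can be called after every streamed chunk, including while the reasoning section is still open.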

Use your imagination here and design your own UI. AI tools accept images, so attaching a sketch to your prompt gives better results.
